BINARY TREEA binary tree is a tree data structure in which each node has atmost two child nodes. The child nodes may contain references to their parent nodes. There is a root node, which has atmost two children but no parent.

According to Graph Theory, a binary tree can be also said to be a connected, acyclic graph data structure, with a maximum degree of three for each vertex.

TERMS ASSOCIATED WITH A BINARY TREE- The root node of a tree is the node with no parents. There is at most one root node in a rooted tree.

- A node with no children is called leaf node.

- The depth of a node is the length of the path from the node to the root. All nodes at the same depth are said to be in the same level. The root node is at level 0.

- The height of a tree is the length of the path from the node which has the highest depth, to the root node.

- The children of the same parent are called siblings.

- A node is an ancestor of another node, if it comes in the path traced from the other node to the root.

- A node is the descendant of another node, if it is the child of the other node, at some level from it.

- The size of a node is the number of descendants it has including itself.

- A perfect binary tree is a full binary tree in which all leaves are at the same depth or same level. (This is ambiguously also called a complete binary tree.)

- A complete binary tree is a binary tree in which every level, except possibly the last, is completely filled, and all nodes are as far left as possible.

- A balanced binary tree is where the depth of all the leaves differs by at most 1. This depth is equal to the integer part of log2(n) where n is the number of nodes on the balanced tree.

BINARY SEARCH TREEA binary search tree is a binary tree in which the numerical value of the data field of the left child is lesser than that of the parent, which is in turn lesser than that of the right child. In short,

(data of left child) < (data of parent) < (data of right child)

[numerical value of data is taken]

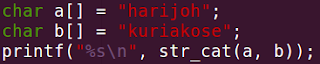

For example,

Here, in both the trees, data is input in the same order, i.e, 34, 43, 56, 21. Searching in the binary tree is evidently very easier in the BST, hence the name binary search tree.

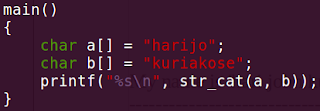

Each node in a binary search tree can be represented by a structure in C, created with the ‘struct’ keyword, typically named ‘node’. In the most simple case, the data field is taken as an integer. There are three pointers associated with each node, whose typical names can be parent, lchild and rchild, of type ‘struct node’ itself. These three pointers also make the structure ‘node’ a

self-referential data structure. Obviously, the parent pointer in the structure representing the root node, will be NULL.

The definition for each node will be:

struct node { int data; struct node *parent; struct node *lchild; struct node *rchild; };The names for the fields are self explanatory, and arbitrarily taken.

(The ‘parent’ pointer points to the parent of a node. It is necessary in an AVL tree only.)

BASIC FUNCTIONS FOR THE BSTSince the binary search tree is a dynamic data structure, the malloc() and free() C standard library functions are used to allocate memory for new nodes while insertion, and to deallocate memory while deleting nodes, respectively. Only the following basic functions are needed to implement the full functionalities associated with a BST.

Constructor -> For creating a new node in the BST

Insert -> For inserting the new node into the BST and

setting up the links

Traverse Inorder -> For inorder traversal through the BST

(for each node, print

LnR)

Traverse Preorder -> For preorder traversal through the BST

(for each node, print

nLR)

Traverse Postorder -> For postorder traversal through the BST

(for each node, print

LRn)

Find -> For returning the node whose data field is the

given numerical value

Delete -> For deleting a node from the BST.

where ’

n’ denotes the current node, ’

L’ the left child, and ’

R’ the right child. (thanks to Shijith for this one …)

N.B. For deletion, if the node is a leaf, just delete it. Otherwise, if the node

has a right child, replace the node with its successor node in the

inorder representation of the BST. Else, replace the node with its

predecessor in the same inorder representation.

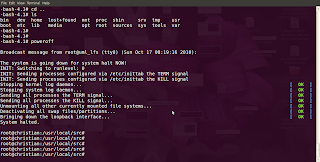

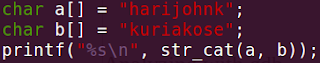

BALANCING A BSTThe BST seems efficient in searching for a particular data. But it is not so always. When there are large number of nodes, there is the possibility that a devastating situation, as shown below, may occur.

In the second BST, data was input in the order 21, 20, 34, 37, 43, 56. Hence, the structure. According to the BST terminology, a structure like this is said to be unbalanced.

N.B. A BST is said to be balanced, when the depth of any two leaves in

the BST differs atmost by 1. Also, a balanced tree will be

theoretically more efficient in all situations, while an unbalanced tree

will be not.

Why is an

unbalanced BST inefficient? For mainly two reasons.

1) The time complexity for searching some data values becomes much

greater than expected. For example, in the first BST, 43 can be found in

two steps of traversal. In the second one, it takes an unexpected 4 steps.

For very large BSTs, the time complexity increases drastically.

2) The lesser the number of levels in the tree, the more efficient the storage.

When operations like deletion are performed on large BSTs, there is

a high possibility that the actual addresses of the nodes are spread out

over a large area of the storage memory (secondary memory).

How to

balance a BST?

There are many techniques available, but in all of them, the simple rule is to keep the difference between the depth of any two leaves atmost 1. The BST can be balanced at a particular point in time as desired, or it can be done while insertion into and deletion from the BST. Such BSTs that do “balanced” insertion and “balanced” deletion are called

self-balancing binary search trees.

THE AVL TREEThe AVL tree is one of the many kinds of a self-balancing binary search tree. It was invented by

G. M. Adelson-Velskii amd

E. M. Landis.

Short Bio of the InventorsG. M. Adelson-VelskiiGeorgy Maximovich Adelson-Velskii, was born on 8 January, 1922 in Samara, Russia. He is a Soviet mathematician and computer scientist. Along with E.M. Landis, he invented the AVL tree (“AV” in “AVL” tree stands for Adelson-Velskii) in 1962.

In 1965, he headed the development of a computer chess program, which evolved into Kaissa, the first world computer chess champion.

He currently resides in Ashdod, Israel.

E. M. LandisEvgenii Mikhailovich Landis, was born on October 6, 1921, in Kharkiv, Ukrainian SSR, Soviet Union. He was a Soviet mathematician who worked mainly on partial differential equations. He studied and worked at the Moscow State University.

With Georgy Adelson-Velsky, he invented the AVL tree datastructure (“L” in “AVL” stands for Landis).

He died in Moscow on December 12, 1997.

The TechniqueThe BST can be easily remodelled into an AVL tree by adding some extra functions that perform balanced insertion and balanced deletion. Some other helper functions that perform some trivial tasks are also needed. The balancing is done by a technique called “rotation”.

What is

rotation?

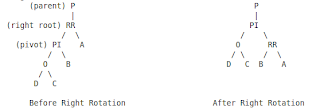

It can be best illustrated only pictorially. There are two ways to rotate.

i.

Right Rotation What happened simply looks as if:

1) node 34 was kept fixed as a “pivot”.

2) 34’s right child was rotated clockwise about the pivot (node 34 here).

3) there is a change in some links of 34 and 43.

On generalising,

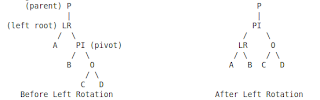

ii.

Left Rotation What happened simply looks as if:

1) node 37 was kept fixed as a “pivot”.

2) 37’s left child was rotated anti-clockwise about the pivot (node 37 here).

3) there is a change in some links of 21 and 37.

On generalising,

N.B. In both cases, the structure of the original BST will be changed to

that after the rotation. The rule for a balanced tree will be obeyed

in both the new structures.

Above mentioned are the two ways to perform rotation. When and how many times to perform them depend on the actual arrangement of the nodes in the BST.

There are four distinguishable imbalance patterns which occur repeatedly in a BST strucuture. These four patterns are recognized by finding out the “balancing factor” for each node. According to the balancing factor thus obtained, the proper sequence of rotations can be initiated.

What is the

balancing factor?

For any node in the BST, its balancing factor is given as:

BF (node) = (height of its left subtree) - (height of its right subtree)(It can be taken the other way round too, but appropriate sign changes have to be made in the balancing factor of nodes.)

BF (node 43) = +2

BF (node 34) = +1

BF (node 21) = +1

BF (node 20) = 0 (leaf)

The balancing factor of any node in a balnced BST will be an integer, in the range -1 to +1, including them. If the balancing factor of a node is found to be -2 or +2, it indicates that the BST is unbalanced at that node.

Next, the proper imbalance pattern is identified. Once it is done, the appropriate sequence of rotations are performed.

THE FOUR CASES OF IMBALANCEFor any number of nodes in a BST, only 4 patterns occur repeatedly. They are classified as the four cases according to which different sequences of left or right rotation or both must be performed. The four cases are:

LEGEND:

1 - node with BF +2 or -2, indicating need for rotation parent - the parent link of node 1

lroot and

rroot - the nodes which get “rotated” during each of the left

or right rotations respectively

pivot - the pivot node in a rotation

From the above figure,

LEFT - LEFT CASE : BF of a node is +2, BF of its left child is +1 or 0

LEFT - RIGHT CASE : BF of a node is +2, BF of its left child is -1

RIGHT - RIGHT CASE : BF of a node is -2, BF of its right child is 0 or -1

RIGHT - LEFT CASE : BF of a node is -2, BF of its right child is +1

Also,

LEFT - LEFT CASE : 1 right rotation

LEFT - RIGHT CASE : 1 left rotation, then 1 right rotation

RIGHT - RIGHT CASE : 1 left rotation

RIGHT - LEFT CASE : 1 right rotation, then 1 left rotation

AVL-SPECIFIC FUNCTIONSBalancing Factor -> Finds the balancing factor for the given node.

Left Rotation -> Perform left rotation, given a “pivot” and “lroot”.

Right Rotation -> Perform right rotation, given a “pivot” and “rroot”.

Balance -> Check balancing factor of given node. If the tree is

unbalanced at this node, identify the imbalance

pattern and perform rotation.

Balanced Insert -> Insert a new node, then travel from its parent to the

root, calling Balance() for each node in the path.

Balanced Delete -> Delete the node, then travel from its parent to the

root, calling Balance() for each node in the path.

If the deleted node was not a leaf, the path starts

from the replaced node itself.

Special Conditions to be Checked while Retracing in Balanced DeletionIf at some node, its BF is:

+1 or -1 : it indicates that the height of the subtree has remained unchanged,

and retracing can stop.

0 : height of the subtree has decreased by 1, and retracing needs to

continue.

+2 or -2 : needs rotation.

If BF of the node after rotation is 0, continue retracing since

height of this subtree has again decreased by 1.

N.B. In insertion, if the BF of a node after rotation is 0, it indicates that the

height of that subtree has remained unchanged, contrary to the similar

situation while deletion.

CONCLUSIONOnce the above extra functions are defined, the BST will be remodelled into an AVL tree.